Kubernetes Security Master Guide

Introduction to Kubernetes Security

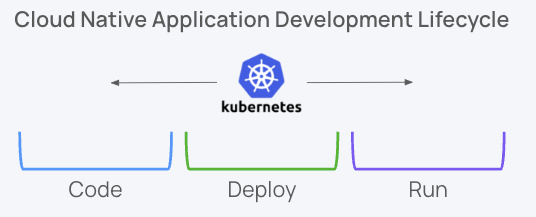

Kubernetes has emerged as the cornerstone of modern cloud-native applications, revolutionizing how these applications are deployed, scaled, and managed. As an open-source container orchestration platform, Kubernetes automates the deployment and management of containerized applications, making it easier for organizations to implement and manage scalable, resilient, and portable applications across various computing environments.

Scope of Kubernetes Security

In cloud-native security, the term “Kubernetes security” describes a wide range of capabilities. For example, it could describe a niche cloud-native security vendor focused only on runtime, or a tool that deals specifically with Kubernetes configurations. Both descriptions would be accurate, because Kubernetes reaches across the network, the cloud, the CI/CD process, and identity, and also has its own unique set of security requirements.

Roles of Kubernetes in Cloud-Native Applications

Kubernetes has redefined the way organizations build, deploy, and manage applications at scale, offering a robust platform that caters to the diverse and dynamic demands of cloud-native environments. From automating deployment processes to managing complex workloads, Kubernetes provides a solution that addresses several key challenges associated with cloud-native applications:

- Container Orchestration: At its core, Kubernetes efficiently manages containerized applications, ensuring they are deployed, scaled, and operated in a consistent and automated manner.

- Scalability: Kubernetes excels in enabling applications to scale seamlessly in response to varying loads, optimizing resource utilization and performance.

- High Availability: With features like self-healing, load balancing, and rolling updates, Kubernetes enhances the availability and resilience of applications, minimizing downtime and ensuring continuous operation.

- Service Discovery and Load Balancing: Kubernetes simplifies the process of connecting and balancing loads among different application components, thereby optimizing resource allocation and user experience.

- Automated Rollouts and Rollbacks: It provides mechanisms for automated updates and rollbacks, enabling agile and secure deployment practices.

- Storage Orchestration: Kubernetes streamlines storage orchestration, allowing applications to automatically mount the storage system of choice, whether from local storage, public cloud providers, or network storage systems.

- Secret and Configuration Management: It securely manages sensitive information such as passwords, OAuth tokens, and SSH keys, making it easier to deploy and update application configurations without rebuilding container images.

- How Kubernetes-first, cloud native security gives earlier detection and better efficiency

Who is Responsible for Kubernetes Security?

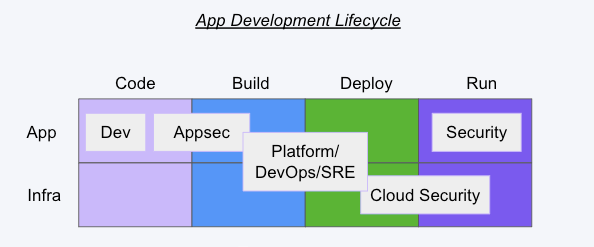

Depending on the size of an organization, responsibility for Kubernetes security is often shared among several roles or teams, each contributing to a different aspect of securing the Kubernetes environment.

Common roles involved in Kubernetes security include:

- DevOps Teams may be responsible for implementing and managing the deployment pipelines, ensuring security is integrated into the CI/CD process.

- Infrastructure Security Engineers may be tasked with protecting the underlying infrastructure that supports Kubernetes clusters.

- IT Security Teams may oversee the IT infrastructure, which includes Kubernetes clusters. They may define policies, conduct security audits, and respond to security incidents.

- Chief Information Security Officers (CISOs) play a strategic role in overseeing the overall security posture of the organization, including Kubernetes environments. They are responsible for setting security policies, ensuring compliance with regulations, and guiding the organization in responding to emerging security threats.

- Cloud Security Teams are focused specifically on securing cloud-based resources, including Kubernetes clusters hosted on the cloud. They may be responsible for securing the cloud infrastructure, network configurations, and access controls.

- SREs (Site Reliability Engineers) may ensure Kubernetes clusters are set up correctly and securely, monitor the clusters' health and performance, and apply patches and updates.

- Security Architects design and oversee the implementation of a security framework for Kubernetes environments.

- Compliance and Risk Management Teams ensure that Kubernetes deployments comply with relevant regulations and standards. They are responsible for risk assessments and compliance audits.

In practice, Kubernetes security is a collaborative effort that requires coordination and communication among various teams and roles (DevSecOps). An organization needs to establish clear policies, provide proper training, and ensure that all stakeholders are aware of their responsibilities in maintaining the security of the Kubernetes environment.

Translating Security Concepts to Kubernetes Security

Are your cybersecurity communications Kubernetes-fluent, or is it time to revamp your vocabulary for better alignment? This article translates traditional security terms into their Kubernetes-specific equivalents. By grasping these Kubernetes-centric terms and concepts, you will enhance your ability to liaise with others familiar with Kubernetes, fostering better collaboration and communication within your organization.

Incident Detection and Response

Traditional Security

In traditional cybersecurity, incident detection and response are core components of an organization’s defense, aiming to swiftly pinpoint and neutralize security breaches to minimize damage and prevent future incidents. Software tools like intrusion detection systems (IDS) and security information and event management (SIEM) platforms detect unauthorized activity through network and log analysis, with threat intelligence improving their accuracy.

Once a threat is identified, a structured response isolates affected systems, eliminates the threat, and reinstates operations. Security teams also perform forensic analysis to understand the breach and strengthen defenses.

Kubernetes Translation: Ingress and Egress Controls, and K8s Auditing and Logging

Within the context of Kubernetes, incident detection and response utilize Ingress and Egress controls, detailed auditing, and logging to oversee and protect data flow within the cluster. Ingress controls restrict entry to cluster services, allowing only vetted requests, while Egress controls monitor and regulate outgoing traffic, blocking potentially harmful external connections.

Key to incident detection and response in Kubernetes is its auditing and logging capabilities, which generate records of cluster activities. These logs provide insights into operational behaviors and potential security irregularities, enabling security teams to identify unauthorized resource access, unexpected configuration tweaks, and other red flags indicative of security incidents.
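As a minimal sketch of how audit records can be searched for such red flags, the Python below scans audit events stored as JSON Lines. The field names (`verb`, `user.username`, `objectRef.resource`, `responseStatus.code`) follow the `audit.k8s.io/v1` Event schema; the sample events, the `TRUSTED_USERS` allowlist, and the two detection rules are illustrative assumptions rather than a recommended rule set.

```python
import json

# Hypothetical allowlist of identities that are expected to read Secrets.
TRUSTED_USERS = {"system:kube-controller-manager", "system:apiserver"}


def flag_event(event):
    """Return the reasons an audit event looks suspicious (empty list if none)."""
    reasons = []
    user = event.get("user", {}).get("username", "<unknown>")
    ref = event.get("objectRef", {})
    code = event.get("responseStatus", {}).get("code")

    # Rule 1 (assumed): Secrets read by an identity outside the allowlist.
    if ref.get("resource") == "secrets" and event.get("verb") in {"get", "list", "watch"}:
        if user not in TRUSTED_USERS:
            reasons.append(f"secret read by {user}")

    # Rule 2 (assumed): the API server rejected the request with 403 Forbidden.
    if code == 403:
        reasons.append(f"forbidden request by {user}")
    return reasons


def scan_audit_log(lines):
    """Parse JSON Lines audit output and return (event, reasons) pairs."""
    findings = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        reasons = flag_event(event)
        if reasons:
            findings.append((event, reasons))
    return findings


# Made-up sample events for illustration only.
SAMPLE_LOG = [
    json.dumps({"verb": "get", "user": {"username": "alice"},
                "objectRef": {"resource": "secrets", "namespace": "prod"},
                "responseStatus": {"code": 200}}),
    json.dumps({"verb": "list", "user": {"username": "bob"},
                "objectRef": {"resource": "pods", "namespace": "dev"},
                "responseStatus": {"code": 403}}),
    json.dumps({"verb": "get", "user": {"username": "carol"},
                "objectRef": {"resource": "pods", "namespace": "dev"},
                "responseStatus": {"code": 200}}),
]

if __name__ == "__main__":
    for event, reasons in scan_audit_log(SAMPLE_LOG):
        print(event["user"]["username"], "->", "; ".join(reasons))
```

In practice the same logic would more likely live in a SIEM query or a log pipeline; the point here is only which audit fields carry the signal.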

It is also important to look at runtime security, which involves monitoring applications while they run in order to spot and stop threats as they occur. This is especially important for containerized apps, which can have unique security needs.

Advanced protection, like RAD Security, can also help by watching over these running apps and responding fast to any signs of trouble.

This holistic approach—melding Ingress and Egress controls with comprehensive auditing, logging, and advanced runtime security—equips organizations with the tools to effectively detect, analyze, and counteract threats in Kubernetes environments.

Guardrails and 'Shift-Left' Approach

Traditional Security

The guardrails concept and 'shift-left' approach in traditional cybersecurity advocate for embedding security measures right from the software development outset, emphasizing early integration of security protocols. This strategy ensures potential risks are addressed early on, significantly reducing the likelihood of vulnerabilities and the associated remediation costs.

By prioritizing security from the design and development phases, this approach enhances the security posture of applications and cultivates a proactive security culture within development teams.

Kubernetes Translation

In Kubernetes, the 'shift-left' philosophy is key to building security into containerized applications from the very beginning. Here's how it's done:

- Kubelet Security: The Kubelet, an important Kubernetes node agent, needs tight security. By implementing authentication and authorization, and ensuring its API is securely accessible, we drastically reduce the chances of malicious exploitation that could compromise the cluster.

- Secrets Management: Secrets like tokens, passwords, and keys are always key targets, so they should be encrypted when stored and transmitted. Kubernetes provides the Secret resource for storing them and TLS for protecting them in transit, but Secret values are only base64-encoded by default, so encryption at rest for etcd should be enabled explicitly.

- Admission Control: This feature is a gatekeeper for the Kubernetes API, inspecting and potentially altering incoming requests. It's an effective way to apply security policies right from the start, ensuring only secure configurations make it into the cluster, preventing vulnerable setups.

- Security Boundaries and Policies: Setting clear boundaries with namespaces and network policies helps isolate workloads, controlling who can communicate with whom. SecurityContext settings allow granular security configuration for pods and containers, such as enforcing the principle of least privilege by running containers as non-root users, and Pod Security Standards (enforced by Pod Security Admission, which replaced Pod Security Policies as of Kubernetes 1.25) apply such requirements across a namespace.

Together, these mechanisms protect the cluster, its workloads, and its secrets from unauthorized access and potential breaches.
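To make the admission control step more concrete, here is a hedged Python sketch of the decision logic a validating admission webhook might apply. The `AdmissionReview` request and response shapes follow the `admission.k8s.io/v1` API; the policy itself (reject any Pod with a privileged container), the helper names, and the sample request are assumptions for illustration, and the HTTPS server and webhook registration are left out.

```python
def containers_of(pod):
    """Return regular and init containers from a Pod manifest (a dict)."""
    spec = pod.get("spec", {})
    return spec.get("containers", []) + spec.get("initContainers", [])


def review(admission_review):
    """Build an AdmissionReview response that rejects privileged containers."""
    request = admission_review["request"]
    pod = request.get("object", {})
    offenders = [
        c.get("name", "<unnamed>")
        for c in containers_of(pod)
        if c.get("securityContext", {}).get("privileged") is True
    ]
    # The response must echo the request uid so the API server can match it.
    response = {"uid": request["uid"], "allowed": not offenders}
    if offenders:
        response["status"] = {
            "code": 403,
            "message": "privileged containers are not allowed: " + ", ".join(offenders),
        }
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


# Hypothetical request, trimmed to the fields this sketch reads.
SAMPLE_REQUEST = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "abc-123",
        "object": {"spec": {"containers": [
            {"name": "app", "securityContext": {"privileged": True}},
        ]}},
    },
}

if __name__ == "__main__":
    print(review(SAMPLE_REQUEST)["response"])
```

Policy engines and the built-in Pod Security Admission controller cover this case without custom code; the sketch only shows where in the request flow the decision is made.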

Identity Management

Traditional Security: The Role of Identity Management

Identity management plays a key role in keeping environments secure by ensuring only verified and approved people or systems can use tech resources. It involves a wide range of methods and tech tools to handle user identities, check who they are, and give them the right access based on their roles and permissions.

Good identity management practices stop unauthorized access, lower the chance of data leaks, and ensure rules and regulations are followed. By keeping control of identities in one place, organizations can make access control smoother, use strong ways to verify identity, and still give users a good experience without compromising security.

Kubernetes Translation: Authentication and Authorization Methods

Authentication

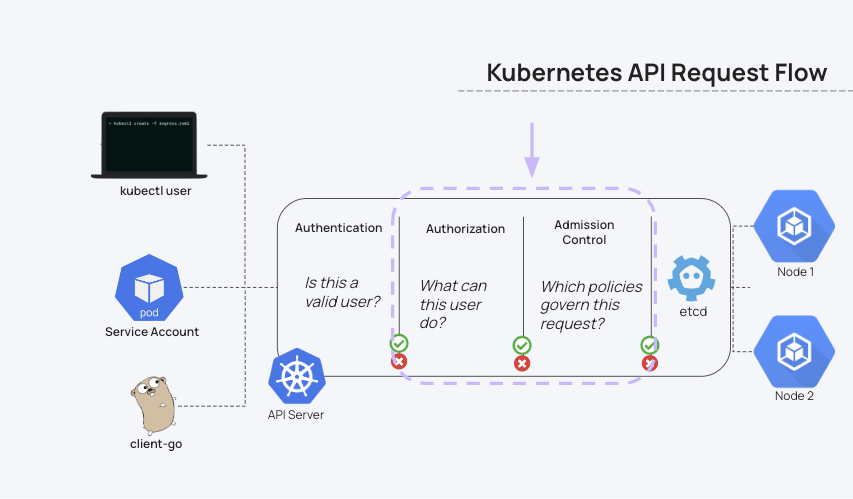

Identity management in Kubernetes is utilized for securing cluster resources and is achieved through sophisticated authentication and authorization methods. Authentication in Kubernetes is the first line of defense, ensuring that all requests to the Kubernetes API are authenticated. Multiple authentication mechanisms are supported, including static tokens, certificates, and third-party identity providers via OpenID Connect.

Service accounts are a Kubernetes-specific feature that provides an identity for processes running in a pod, enabling them to interact with the Kubernetes API securely. These accounts are tied to specific namespaces, providing a way to apply access controls to the resources within.
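The namespace tie is visible in the identity itself: the API server sees a service account as the username `system:serviceaccount:<namespace>:<name>`. The small Python sketch below splits such a username apart, which can be handy when reading audit logs; the function name and the sample values are hypothetical.

```python
SERVICE_ACCOUNT_PREFIX = "system:serviceaccount:"


def parse_service_account(username):
    """Split a service account username into namespace and name.

    Returns None when the username does not belong to a service account.
    """
    if not username.startswith(SERVICE_ACCOUNT_PREFIX):
        return None
    parts = username[len(SERVICE_ACCOUNT_PREFIX):].split(":")
    if len(parts) != 2 or not all(parts):
        return None
    return {"namespace": parts[0], "name": parts[1]}


if __name__ == "__main__":
    # Hypothetical identities as they might appear in an audit record.
    print(parse_service_account("system:serviceaccount:payments:api-backend"))
    print(parse_service_account("alice@example.com"))
```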

Authorization

Following authentication, authorization in Kubernetes determines what authenticated users or processes are allowed to do. Kubernetes employs Role-Based Access Control (RBAC) as a primary method for defining and applying access policies. With RBAC, access rights are granted to users or service accounts based on their roles within the organization, and policies are defined in terms of Kubernetes resources and actions (e.g., get, list, create, delete).

This allows developers and security engineers to define granular access controls that restrict what users and service accounts can do, enhancing the security posture of the Kubernetes environment.
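As an illustration of what such a granular policy looks like, the Python sketch below builds a namespaced `Role` and `RoleBinding` as plain dictionaries (the `rbac.authorization.k8s.io/v1` field names are the real ones) and includes a deliberately simplified check of whether a rule permits a verb on a resource. The names `pod-reader`, `dev`, and `ci-runner` are hypothetical, and the check ignores API groups, resource names, and subresources, which the real authorizer does consider.

```python
import json


def make_role(namespace, name, resources, verbs):
    """Build a namespaced Role manifest for core-API-group resources."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [{"apiGroups": [""], "resources": resources, "verbs": verbs}],
    }


def make_role_binding(namespace, name, role_name, service_account):
    """Bind a Role to a service account in the same namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": name},
        "subjects": [{"kind": "ServiceAccount", "name": service_account,
                      "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "Role", "name": role_name},
    }


def rule_allows(role, resource, verb):
    """Simplified check: does any rule in the Role allow verb on resource?"""
    for rule in role["rules"]:
        resource_ok = "*" in rule["resources"] or resource in rule["resources"]
        verb_ok = "*" in rule["verbs"] or verb in rule["verbs"]
        if resource_ok and verb_ok:
            return True
    return False


if __name__ == "__main__":
    role = make_role("dev", "pod-reader", ["pods"], ["get", "list", "watch"])
    binding = make_role_binding("dev", "read-pods", "pod-reader", "ci-runner")
    # kubectl accepts JSON as well as YAML manifests.
    print(json.dumps(role, indent=2))
    print(json.dumps(binding, indent=2))
    print("can delete pods:", rule_allows(role, "pods", "delete"))
```

Because RBAC is purely additive (there are no deny rules), anything not listed in a rule is refused, which is why starting from a narrow verb list is the safer default.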

Zero Trust by Design

Traditional Security: Core Principles of Zero Trust Architecture

Zero Trust architecture embodies the principle of "never trust, always verify" as its core tenet, fundamentally shifting away from conventional security models that assume everything inside the network is safe. This approach requires strict identity verification for every person and device attempting to access resources on a network, regardless of whether the access request comes from within or outside the network's boundaries.

Zero Trust minimizes the attack surface by enforcing least privilege access and segmenting the network to contain potential breaches. It integrates technologies and policies, including multi-factor authentication, encryption, and continuous network traffic monitoring, to ensure that security does not rely on static defenses but on real-time decision-making based on observed behaviors and attributes.

Kubernetes Translation: Applying Zero Trust Principles

When applied to Kubernetes, Zero Trust principles involve leveraging the platform's inherent capabilities for authentication and authorization to enforce strict access controls. By requiring all users and services to authenticate before accessing any resources, Kubernetes aligns with the Zero Trust model's mandate of "never trust, always verify."

Authentication mechanisms in Kubernetes, such as service accounts, certificates, and third-party tokens, provide a framework for verifying identities. Once authenticated, the authorization process, primarily through Role-Based Access Control (RBAC), ensures that each entity has the minimum necessary permissions to perform its functions. This practice of least privilege reduces the risk of unauthorized access and limits the potential damage from compromised accounts or services.

Addressing Kubernetes' "open by default" configurations aids in realizing a Zero Trust architecture. Many Kubernetes installations have settings that favor ease of use over security, potentially exposing resources to unnecessary risk.

To secure these environments, administrators must actively review and adjust configurations to close any unintended open access. This includes securing API endpoints, enabling network policies to restrict traffic between pods, and applying security contexts to enforce runtime constraints on container operations.
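One common way to close the "open by default" gap for pod traffic is a default-deny NetworkPolicy per namespace. The Python sketch below generates one as JSON; the manifest fields are the standard `networking.k8s.io/v1` ones, while the policy name and the namespace list are assumptions. Note that NetworkPolicy objects only take effect when the cluster's network plugin enforces them.

```python
import json


def default_deny_policy(namespace):
    """Build a NetworkPolicy that blocks all ingress and egress in a namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            # An empty podSelector selects every pod in the namespace.
            "podSelector": {},
            # Listing both types with no allow rules denies both directions.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


if __name__ == "__main__":
    # Hypothetical namespaces to lock down.
    for ns in ["payments", "frontend"]:
        print(json.dumps(default_deny_policy(ns), indent=2))
```

With this baseline in place, each workload then needs an explicit allow policy for the traffic it genuinely requires (including DNS egress), which is the "never trust, always verify" stance applied to the network.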

We've shown how everyday cybersecurity language fits into the world of Kubernetes, making it easier for you to discuss security issues with your team. Knowing these terms helps you spot and fix security risks faster.

Prerequisites To Implementing Kubernetes Security

Thinking about when to implement security for Kubernetes is much like any other field of technology. If you’re a Systems Administrator or Infrastructure Engineer, you must understand certain platforms and systems prior to securing them. For example, if you’re running workloads in ESXi, you must first understand ESXi to properly secure it. If you’re a developer, you have to first understand overall programming, computer science concepts, and application architecture prior to properly securing code with the absolute best practices.

All fields of focus, including Kubernetes, have prerequisites to fully understand how to properly secure the environment.

Next, we'll cover what you should know before thinking about implementing security practices.

Understanding The Cluster Components

The most critical place to start, much as it would be for a Systems Administrator or Infrastructure Engineer, is the cluster itself. The Kubernetes cluster has a wide range of components for both the control plane and the worker node.

Below is a full list with high-level explanations only, as detailed explanations could fill an entire book chapter on their own.

For the control plane, you have:

- API server: How you communicate with Kubernetes and how Kubernetes resources communicate with the cluster itself

- Etcd (cluster store): The database for Kubernetes. It stores all stateful data about the cluster.

- Controller Manager: All resources have a Controller (Deployments, Pods, Services, etc.). The Controller ensures that the current state is the desired state.

- Scheduler: Schedules Pods to run on certain worker nodes based on worker node consumption and availability.

For the worker node, you have:

- Kubelet: The agent that runs on each node. It registers each new node to the cluster. It also watches the API server for new tasks, for example when a new Pod needs to be deployed.

- Container runtime: The software that actually runs containers inside of Pods in Kubernetes (for example, containerd or CRI-O).

- DNS: In the Kubernetes sense, DNS resolves Kubernetes service names to IP addresses. DNS inside of Kubernetes is CoreDNS.

- Kube-proxy: Maintains the network rules on each node that route traffic for Services to the right Pods.

Without understanding each of the core components of Kubernetes, especially how they communicate and operate with each other, you cannot properly secure them.

For example, many cloud-based Kubernetes services give the control plane a public IP address by default. That means your control plane is exposed to the internet from the start. If a malicious entity knows that, or can find the cluster on the internet, they can attempt brute-force or denial-of-service attacks against it and, if they get in, gain access to the Kubernetes cluster where your entire application lives.
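A quick way to reason about this exposure is to check whether the address your API server endpoint resolves to is publicly routable. The Python sketch below uses the standard-library `ipaddress` module for that; the cluster names and addresses are made up, and obtaining the real endpoint address (from your kubeconfig or cloud console) is left out.

```python
import ipaddress


def is_publicly_routable(address):
    """True when the IP is globally routable, i.e. reachable from the internet."""
    return ipaddress.ip_address(address).is_global


# Hypothetical API server endpoint addresses, for illustration only.
ENDPOINTS = {
    "staging-cluster": "10.0.12.5",
    "legacy-cluster": "8.8.8.8",
}

if __name__ == "__main__":
    for name, address in ENDPOINTS.items():
        status = "PUBLIC" if is_publicly_routable(address) else "private"
        print(f"{name}: {address} is {status}")
```

A public address is not automatically a vulnerability, but it does mean authentication, authorized source ranges, or a private endpoint deserve attention before anything else.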

If you notice, a lot of the pieces that you must understand are all pieces that have existed for many years, way before Kubernetes. Infrastructure, operating systems, and virtual machines have needed security since the beginning and will continue to need security. APIs have always needed security. Databases have always needed security.

The only difference is that the layers listed above are Kubernetes-related, even though the security concerns behind them aren’t “Kubernetes specific”.

Understanding The Network

Networking in Kubernetes, much like networking for anything else in tech, is the core communication method for:

- Pods

- Services

- Worker nodes

- Control planes

Without networking set up, Kubernetes wouldn’t work. Without a network framework/plugin installed after configuring the control plane, none of the Kubernetes nodes would be in a “Ready” state as they need networking and communication methods to properly be deployed.

Source: https://kubernetes.io/images/blog/2018-06-05-11-ways-not-to-get-hacked/kubernetes-control-plane.png

Inside of Kubernetes, there are two networking components: kube-proxy and CoreDNS.

Kube-proxy runs on every node and maintains the network rules that let Pods, Services, and other Kubernetes resources send data back and forth to each other.

The Kubernetes cluster has its own CIDR range, and Pods receive IP addresses from the pool of available addresses in that range. The CIDR range is defined by whoever, or whatever (the automation system), creates the Kubernetes cluster. These addresses are how Pods communicate with each other.
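To get a feel for how a cluster CIDR is carved up, the Python sketch below uses the standard-library `ipaddress` module. The range `10.244.0.0/16` and the choice of one `/24` block per node are assumptions for illustration; the actual values depend on how the cluster and its network plugin were configured.

```python
import ipaddress

# Hypothetical Pod CIDR chosen when the cluster was created.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")


def node_pod_ranges(cidr, node_prefix=24):
    """Split the cluster CIDR into per-node blocks of the given prefix length."""
    return list(cidr.subnets(new_prefix=node_prefix))


def in_pod_range(address, cidr):
    """True when an IP address belongs to the cluster's Pod CIDR."""
    return ipaddress.ip_address(address) in cidr


if __name__ == "__main__":
    ranges = node_pod_ranges(CLUSTER_CIDR)
    print("total Pod addresses:", CLUSTER_CIDR.num_addresses)
    print("per-node blocks:", len(ranges), "first:", ranges[0])
    print("10.244.3.7 is a Pod IP:", in_pod_range("10.244.3.7", CLUSTER_CIDR))
```

Knowing the range matters for security work because firewall rules, network policies with `ipBlock` entries, and log analysis all need to tell Pod traffic apart from everything else.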

Kubernetes Services are reached via IP addresses and DNS names. A Service of the default type receives a virtual IP address (a ClusterIP) from the cluster's Service address range, along with dedicated ports through which internal Kubernetes resources can reach it; kube-proxy then routes traffic sent to that address to the Pods behind the Service. Services also have dedicated DNS names, which is why, when outside entities communicate with an app running on Kubernetes, the communication is done via a Service.
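The DNS name of a Service follows a predictable pattern, `<service>.<namespace>.svc.<cluster-domain>`, which the short Python sketch below assembles. The cluster domain defaults to `cluster.local` in most installations but is configurable, so treat it as an assumption; the service and namespace names are hypothetical.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # Hypothetical Service "orders" in namespace "shop".
    print(service_dns_name("orders", "shop"))
```

Because these names are predictable, any Pod that is allowed to reach cluster DNS can discover and attempt to connect to Services in other namespaces unless network policies say otherwise.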

This is all possible via the Container Network Interface (CNI), which is the available plugin standard for Kubernetes networking.

If you’re wondering about the actual servers themselves that run control planes and worker nodes - they communicate with each other outside of the kube-proxy. The communication for the servers occurs on the actual environment network. For example, if you’re running Kubernetes clusters in a data center, the servers that are running Kubernetes are talking to each other over the networking switch on the LAN. If your Kubernetes clusters are spanning across multiple data centers, traffic is being routed via a router or a firewall/router combination and packets are being sent to the network switches, which in turn send to the virtual machines running the Kubernetes cluster.

If you’ve worked with networking before, you’ll notice that everything explained here is networking-specific with a touch of “Kubernetes specific”. Meaning, 99% of networking in Kubernetes is general networking, which you must understand to properly secure the environment.

Understanding How Containers Work

Beyond the cluster, infrastructure, and networking, you also need to understand how containers work.

Containers are a form of operating system virtualization, not to be confused with system virtualization like ESXi or Hyper-V.

The gist is that system virtualization gave engineers a beautiful thing - they no longer had to dedicate an entire bare-metal server to one application, which in turn, frees up a ton of availability and usability out of one server. Instead of having one application per server, you could have five, or ten, or twenty, or however many applications that could fit on the server safely and without risking the server crashing due to too much memory and CPU consumption.

Containers took this concept a step further. Instead of virtualizing the hardware/bare metal, containers are virtualizing the operating system.

Source: https://www.docker.com/resources/what-container/

It works like this…

You have a server, maybe running Linux. Then, you have a container runtime running on Linux. Once the container runtime is running on Linux, you can start to deploy containers, which are lightweight versions of an operating system. Lightweight in this case means a container literally only has what it needs to run the application. For example, some container images don’t even have the ability to run `curl` or `wget`. They only have what’s needed.

Speaking of container images, a container image is the “golden image” of the container. You can then take that container image and deploy it into Pods across your cluster.

Pods, in Kubernetes, run one or more containers (additional helper containers that run alongside the main application container are often called sidecar containers). All containers in a Pod share the Pod's IP address, so when you’re communicating with a Pod, you’re communicating with the containers running inside of it.

The key piece to understanding containers is to know that a container is a lightweight version of an operating system that’s used to run a specific piece of an application, which is where microservices come into play.

When it comes to container security, you must ensure that:

- The application running inside of the container isn’t vulnerable.

- The container image only has exactly what it needs to run the app inside of the container image and nothing more.

- The Kubernetes configuration for the container has the proper securityContext settings to minimize or eliminate vulnerabilities such as privilege escalation or host file system and network access.
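The last item in the list above lends itself to automation. As a rough sketch, the Python below inspects a Pod spec (as a dictionary) for a few risky settings: privileged containers, privilege escalation left enabled, missing `runAsNonRoot`, `hostNetwork`, and `hostPath` volumes. The field names are real Pod spec fields; the selection of checks, the wording of the findings, and the sample specs are assumptions, and this is far from a complete policy.

```python
def lint_pod_spec(pod_spec):
    """Return a list of findings for risky settings in a Pod spec dict."""
    findings = []

    # Host-level access requested by the Pod as a whole.
    if pod_spec.get("hostNetwork"):
        findings.append("pod uses hostNetwork")
    for volume in pod_spec.get("volumes", []):
        if "hostPath" in volume:
            findings.append(f"volume {volume.get('name')} mounts hostPath")

    pod_sc = pod_spec.get("securityContext", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        sc = container.get("securityContext", {})
        if sc.get("privileged"):
            findings.append(f"{name}: privileged")
        # Escalation is treated as enabled unless explicitly set to False.
        if sc.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: allowPrivilegeEscalation not disabled")
        # A container-level setting overrides the pod-level one.
        if not sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot", False)):
            findings.append(f"{name}: runAsNonRoot not set")
    return findings


# Hypothetical specs for illustration.
RISKY_SPEC = {
    "hostNetwork": True,
    "volumes": [{"name": "host", "hostPath": {"path": "/"}}],
    "containers": [{"name": "app", "securityContext": {"privileged": True}}],
}
HARDENED_SPEC = {
    "securityContext": {"runAsNonRoot": True},
    "containers": [{"name": "app",
                    "securityContext": {"allowPrivilegeEscalation": False}}],
}

if __name__ == "__main__":
    for finding in lint_pod_spec(RISKY_SPEC):
        print("finding:", finding)
    print("hardened findings:", lint_pod_spec(HARDENED_SPEC))
```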

Understanding the overall complexity of not only Kubernetes but of networking and infrastructure is key to figuring out how to properly secure a Kubernetes cluster. Without understanding the underlying layers that make up Kubernetes, securing it will be out of reach. Without truly understanding networking and infrastructure at a deeper level, understanding the underlying Kubernetes layers will be out of reach. It’s a trickle effect from understanding operating systems, to understanding architecture, to understanding networking. Kubernetes may be “newish”, but how Kubernetes is architected and configured is not new.

Figuring out the security implications and architecture of Kubernetes isn’t easy, but it’s an absolute must if you plan on securing your environment.

The moral of the story? Understand how Kubernetes works underneath the hood and if you don’t already know infrastructure, learn it.

Understanding Kubernetes Architecture

Understanding the architecture of Kubernetes is fundamental to securing it. This section outlines the basic architectural components of Kubernetes, their default configurations, and their lifecycle. By comprehending how these elements interconnect and operate, you can identify potential security vulnerabilities and implement effective safeguards.

Kubernetes Architecture Basics

At the heart of Kubernetes' ability to manage containerized applications at scale is its distinctive and dynamic architecture. We will look at the core elements of this architecture, starting from the smallest unit, the Pod, to the broader constructs such as Nodes, Clusters, and the Control Plane. Each of these components plays a pivotal role in the orchestration and operation of containerized applications, and understanding their functions and interactions is key to both maximizing the efficiency of Kubernetes and securing its environments.

- Pods and Nodes: Pods are the smallest deployable units in Kubernetes, housing one or more containers. Nodes are worker machines in Kubernetes, which can be physical or virtual machines, where pods are scheduled and run.

- Clusters: A cluster is a set of nodes that run containerized applications. It includes at least one worker node and a control plane, which orchestrates container workloads.

- Control Plane Components: The control plane's components, including the kube-apiserver, kube-scheduler, kube-controller-manager, and cloud-controller-manager, manage the state of the cluster.

- Default Configurations: Many Kubernetes installations come with default configurations that may not be secure. It's crucial to review and adjust these settings to enhance security. (Refer to Preventing Misconfigurations for more details).

→ Learn more about Kubernetes Architecture

Lifecycle Management

Lifecycle management refers to the process of managing the lifecycle of resources within a Kubernetes cluster. This usually involves activities including initialization, scaling, monitoring, maintenance, patching, backups, disaster recovery, decommissioning, and cleanup.

→ Overview of the Kubernetes Deployment Lifecycle

Kubernetes Security Best Practices

There are many best practices guides available in the industry around Kubernetes security, for example the Kubernetes OWASP Top 10, DISA, NIST and the CIS benchmarks.

Both the Center for Internet Security (CIS) and the Defense Information Systems Agency (DISA) have published container security benchmarks and recommended practices. These documents provide detailed guidance on configuring your Kubernetes environment and protecting it against potential threats. They also act as industry-wide security standards trusted and accepted by many organizations.

The National Institute of Standards and Technology (NIST) has released Special Publication (SP) 800-190, the Application Container Security Guide, to help organizations secure their containerized applications and related infrastructure components.

In general, best practices cover container security, hardening Kubernetes itself, runtime security, and, if Kubernetes is deployed through a managed cloud service, the security of that service.

- Skip straight to the Kubernetes Security Checklist

Kubernetes Security Best Practices for Managed Cloud Providers

There are also best practices for securing managed Kubernetes services from cloud providers, such as EKS, AKS, and GKE. The following sections cover EKS and AKS.


Amazon Elastic Kubernetes Service (EKS) Best Practices

When using EKS, it’s vital to implement both the AWS EKS architecture best practices and the AWS EKS security best practices. These cover Identity and Access Management (IAM) policies, pod security policies (enforced with Pod Security Admission on Kubernetes 1.25 and later, where the older PodSecurityPolicy API no longer exists), runtime security policies that define the security configurations for your applications, network policies, infrastructure security, and data encryption.

Regarding IAM policies, you should tightly control who has access to your Kubernetes clusters. This can be done by creating roles and users with limited access and tagging all the resources within your clusters. In addition, you should create network policies that control ingress and egress traffic for your clusters. These network controls allow you to restrict access to specific ports, protocols, and IP addresses.

For pod and runtime security policies, you should implement policies that dictate the security settings for your applications. This involves setting user access restrictions and ensuring that all services are running in secure containers with appropriate security settings. Infrastructure security is also important when using EKS.

To secure your resources, you should consider using AWS security groups and network ACLs to limit access to specific ports. Additionally, enabling logging and audit trails will aid you in detecting any suspicious activity.

EKS multi-tenancy best practices are often considered when deploying multiple applications onto the same cluster. This typically involves setting resource limits for each application and ensuring network security between tenants.
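For the resource-limit side of multi-tenancy, a per-namespace `ResourceQuota` is one standard Kubernetes mechanism. The Python sketch below generates such a manifest as JSON; the quota keys (`requests.cpu`, `limits.memory`, `pods`, and so on) are real, while the tenant names and the numbers are invented, and the one-namespace-per-tenant layout is an assumption.

```python
import json


def tenant_quota(namespace, cpu, memory, pods):
    """Build a ResourceQuota capping a tenant namespace's total usage."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": pods,
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical tenants and limits.
    for tenant in ["team-a", "team-b"]:
        print(json.dumps(tenant_quota(tenant, "4", "8Gi", "20"), indent=2))
```

A quota limits how much one tenant can consume, but it does not isolate tenants from each other on the network, so it is usually paired with network policies.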

Finally, data encryption and secrets management should be implemented for your Kubernetes clusters. This includes using encryption at rest and in transit and configuring Kubernetes secrets to securely store sensitive information. Following these AWS Kubernetes security best practices will make your container environment more secure and protected from potential threats.
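One detail worth seeing first-hand is that the `data` field of a Kubernetes Secret is base64-encoded, not encrypted, which is why encryption at rest and tight access control still matter. The Python sketch below builds a Secret manifest and then trivially decodes it; the secret name, namespace, and value are made up for illustration.

```python
import base64


def make_secret(name, namespace, values):
    """Build an Opaque Secret manifest; values are base64-encoded as required."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


def read_secret(secret):
    """Decode a Secret's data. No key is needed: base64 is only an encoding."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret["data"].items()
    }


if __name__ == "__main__":
    # Hypothetical credential.
    secret = make_secret("db-credentials", "payments", {"password": "hunter2"})
    print("stored form:", secret["data"]["password"])
    print("recovered:  ", read_secret(secret)["password"])
```

Anyone who can read the Secret object, or the underlying etcd data when it is not encrypted, can recover the value this way.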

Azure Kubernetes Security Best Practices

Azure is another powerful cloud platform for running and deploying Kubernetes clusters. Microsoft has provided a guide to establishing an Azure AKS security baseline, which provides a set of secure configuration settings for AKS clusters. This baseline includes recommendations on using RBAC (role-based access control) to restrict access to Kubernetes resources. It also includes suggestions on using Kubernetes security features such as pod security controls and Network Policies.

To begin, you should secure your AKS deployment by setting up role-based access control (RBAC) to restrict access to Kubernetes resources. This means creating users and roles with limited access, and using tags to identify resources.

The next step is to use Kubernetes security features such as pod security controls (Pod Security Admission on Kubernetes 1.25 and later, which replaced Pod Security Policies) and Network Policies to restrict what pods can do and which networks they can reach. This allows you to control which services can communicate with each other, alongside setting resource limits for each application.

You should also use Transport Layer Security (TLS) to ensure that traffic between services is encrypted and secure. Finally, you should enable logging and audit trails to monitor activity on your cluster. This will ensure that you can detect suspicious behavior and act accordingly.

Lastly, it is important to understand the difference between cloud IAM and Kubernetes RBAC when using a managed Kubernetes platform.

Understanding the Kubernetes Threat Landscape

As Kubernetes cements its position as the backbone of containerized application deployment, it's imperative to recognize and understand the myriad of threats that this complex ecosystem faces. The Kubernetes threat landscape is diverse and ever-evolving, presenting unique challenges that stem from both the inherent architecture of Kubernetes and the sophisticated nature of modern cyber threats.

Common Kubernetes Vulnerabilities

As Kubernetes continues to dominate the container orchestration landscape, understanding its vulnerabilities is paramount for maintaining robust security.

- API server vulnerabilities arise from misconfigured or unprotected API servers, leading to unauthorized access.

- Container vulnerabilities are risks associated with container runtime environments, including container escape vulnerabilities.

- Network exposures are threats stemming from inadequate network policies or misconfigurations that expose internal services to the public internet.

Emerging Threats in Kubernetes Ecosystem

In the rapidly evolving world of Kubernetes and cloud-native technologies, new threats are revealed as quickly as the technologies themselves advance. In 2023 alone, there were CVEs in third-party plugins for Kubernetes that help with storage, admission control, managed providers, and more.

- Keep Tabs on Emerging Threats

- CVE-2023-38546 and CVE-2023-38545: Curl Bug Kubernetes Vulnerability

- Addressing a New Kubernetes Vulnerability in Kyverno: CVE-2023-34091

- Addressing a New Third-Party Kubernetes Vulnerability in Rancher: CVE-2023-22647

Container security considerations

Kubernetes security is critical, as containers continue to transform software development. As the new foundation for CI/CD, containers give you a fast, flexible way to deploy apps, APIs, and microservices with the scalability and performance digital success depends on. But containers and container orchestration tools such as Kubernetes are also popular targets for hackers — and if they’re not protected effectively, they can put your whole environment at risk.

As an application-layer construct relying on a shared kernel, a container can boot up much faster than a full VM. On the other hand, containers can also be configured much more flexibly than a VM, and can do everything from mounting volumes and directories to disabling security features. In a “container breakout” scenario, when container isolation mechanisms have been bypassed and additional privileges have been obtained on the host, the container can even run as root under the control of a hacker — and then you’re in real trouble.

Here are a few things you can do to keep the bad guys out of your containers.

Layer 0 – The Kernel

Kubernetes is an open source platform built to automate the deployment, scaling, and orchestration of containers, and configuring it properly can help you strengthen security. At the kernel level, you can:

- Review allowed system calls and remove any that are unnecessary or unwanted

- Verify your kernel versions are patched and contain no known vulnerabilities

Container Security - Layer 1

At Rest

Container security at rest focuses on the image you’ll use to build your running container. First, reduce the container’s attack surface by removing unnecessary components, packages, and network utilities — the more stripped-down, the better. Consider using distroless images containing only your application and its runtime dependencies.

Next, make sure to pull your images only from known-good sources, and scan them for vulnerabilities and misconfigurations. Check their integrity throughout your CI/CD pipeline and build process, and verify and approve them before running to make sure hackers haven’t installed any backdoors.
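One way to make "known-good sources" checkable in a pipeline is to reject any image reference that does not come from an approved registry or is not pinned to an immutable digest. The sketch below is a minimal illustration of that idea, not a replacement for a real scanner or signature verification. The registry name `registry.example.com` and the function names are hypothetical.

```python
import re

# Hypothetical allowlist; replace with the registries your organization trusts.
TRUSTED_REGISTRIES = ("registry.example.com/",)

# An image pinned by digest ends with @sha256:<64 hex characters>.
DIGEST_PATTERN = re.compile(r"@sha256:[0-9a-f]{64}$")


def image_findings(image: str) -> list[str]:
    """Return the problems found in a single image reference."""
    findings = []
    if not image.startswith(TRUSTED_REGISTRIES):
        findings.append("untrusted registry")
    if not DIGEST_PATTERN.search(image):
        findings.append("not pinned by digest")
    return findings


def check_pod_images(pod: dict) -> dict[str, list[str]]:
    """Map each offending image in a pod manifest to its findings."""
    spec = pod.get("spec", {})
    containers = spec.get("initContainers", []) + spec.get("containers", [])
    report = {}
    for container in containers:
        problems = image_findings(container["image"])
        if problems:
            report[container["image"]] = problems
    return report


if __name__ == "__main__":
    pod = {
        "spec": {
            "containers": [
                {"name": "api", "image": "registry.example.com/api@sha256:" + "0" * 64},
                {"name": "sidecar", "image": "nginx:latest"},
            ]
        }
    }
    for image, problems in check_pod_images(pod).items():
        print(f"{image}: {', '.join(problems)}")
```

Pinning by digest matters because a tag such as `latest` can be repointed at a different image after you have scanned and approved it, whereas a digest identifies exactly one image.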

Runtime

Once your image is packed up and running, you need a way to debug it. Ephemeral containers will let you debug running containers interactively, including distroless or other lightweight images that don’t have their own debugging utilities. Watch for anomalies and suspicious system-level events that might be indicators of compromise, such as an unexpected child process being spawned, a shell running inside a container, or a sensitive file being read unexpectedly. Falco, the Cloud Native Computing Foundation’s open source runtime security tool, and the many Falco rules files that have been created, are hugely useful for this.

Securing the Workload (Pod) - Layer 2

A pod, the unit of deployment inside Kubernetes, is a collection of containers that can share common security definitions and security-sensitive configurations. Pod Security Standards specify a set of policies with three levels of restriction: Privileged, Baseline, and Restricted. These policies cover the following Kubernetes controls and more:

- Host Namespaces

- Privileged Containers

- HostPath volumes

- Seccomp

- Volume Types

- Running as Non-root

To strengthen basic defense at the pod level, you can use the Pod Security admission controller with the Restricted level, the most restrictive policy set. For more flexibility and granular control over pod security, consider Open Policy Agent (OPA) through the OPA Gatekeeper project. You will want to evaluate Kubernetes admission controllers carefully.
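As a rough illustration, the Pod Security admission controller is switched on per namespace through `pod-security.kubernetes.io/*` labels, and the most restrictive level is named `restricted`. The sketch below builds such a namespace manifest and adds a deliberately simplified checker covering only a handful of the Restricted controls. The namespace name `payments` and the function `restricted_violations` are hypothetical, and the checker is a teaching aid rather than a reimplementation of the admission controller.

```python
import json

# Labels read by the built-in Pod Security admission controller.
# "payments" is a hypothetical namespace name.
NAMESPACE = {
    "apiVersion": "v1",
    "kind": "Namespace",
    "metadata": {
        "name": "payments",
        "labels": {
            "pod-security.kubernetes.io/enforce": "restricted",
            "pod-security.kubernetes.io/audit": "restricted",
            "pod-security.kubernetes.io/warn": "restricted",
        },
    },
}


def restricted_violations(pod: dict) -> list[str]:
    """Check a pod manifest against a small subset of the Restricted level."""
    spec = pod.get("spec", {})
    pod_sc = spec.get("securityContext", {})
    violations = []

    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(field):
            violations.append(f"{field} must not be enabled")

    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        sc = container.get("securityContext", {})

        if sc.get("privileged"):
            violations.append(f"{name}: privileged containers are not allowed")
        if sc.get("allowPrivilegeEscalation") is not False:
            violations.append(f"{name}: allowPrivilegeEscalation must be false")
        # Container-level settings override pod-level ones.
        if not sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot", False)):
            violations.append(f"{name}: runAsNonRoot must be true")
        seccomp = sc.get("seccompProfile", pod_sc.get("seccompProfile", {}))
        if seccomp.get("type") not in ("RuntimeDefault", "Localhost"):
            violations.append(f"{name}: seccompProfile must be RuntimeDefault or Localhost")
        if "ALL" not in sc.get("capabilities", {}).get("drop", []):
            violations.append(f"{name}: must drop ALL capabilities")

    return violations


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML manifests.
    print(json.dumps(NAMESPACE, indent=2))
```

Setting `audit` and `warn` alongside `enforce` is a common way to see what would be rejected before you tighten enforcement on an existing namespace.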

Kubernetes Networking - Layer 3

Compromised Workloads

By default, all pods can talk to all the other pods in a cluster without restriction, which makes things very interesting from an attacker’s perspective. If a workload is compromised, the attacker will likely try to probe the network and see what else they might be able to access. The Kubernetes API is also available to access from inside the pod, offering another rich target. And if you see traffic originating from a container in a cluster reaching out to a foreign IP that hasn’t been touched before, it’s not a good sign.

Strict network controls are a critical part of container hardening — pod to pod, cluster to cluster, outside-in, and inside-out. Use built-in Network Policies to isolate workload communication and build granular rulesets. Consider implementing a service mesh to control traffic between workloads as well as ingress/egress, such as by defining namespace-to-namespace traffic.
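A common starting point for the isolation described above is a default-deny Network Policy per namespace, followed by narrow allow rules. The sketch below generates both as JSON manifests. The namespace `shop`, the `app` label values, and the helper names are hypothetical, and the policies only take effect if your cluster's network plugin enforces Network Policies.

```python
import json


def default_deny(namespace: str) -> dict:
    """Deny all ingress and egress for every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # an empty selector matches every pod
            "policyTypes": ["Ingress", "Egress"],
        },
    }


def allow_ingress(namespace: str, app: str, from_app: str, port: int) -> dict:
    """Allow pods labeled app=<from_app> to reach app=<app> on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policies = [
        default_deny("shop"),
        allow_ingress("shop", app="orders", from_app="frontend", port=8080),
    ]
    for policy in policies:
        print(json.dumps(policy, indent=2))
```

Network Policies are additive, so the allow rule opens exactly one path through the default-deny baseline. With egress denied, workloads will usually also need an explicit rule permitting DNS lookups.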

Application Layer (L7) Attacks – Server-Side Request Forgery (SSRF)

SSRF is consistently in the news when it relates to Kubernetes, and no wonder. With cloud native environments where APIs talk to other APIs, SSRF can be especially hard to stop; customer-supplied webhooks are especially notorious. Once a target has been found, SSRF can be used to escalate privileges and scan the local Kubernetes network and components; hit the cloud metadata endpoint; dump out the data on the Kubernetes metrics endpoint to learn valuable information about the environment — and potentially make it possible to take it over completely.

Application Layer (L7) Attacks – Remote Code Execution (RCE)

RCE is also extremely dangerous in cloud native environments, making it possible to run system-level commands inside a container to grab files, access the Kubernetes API, run image manipulation tools, and compromise the entire machine.

Application Layer (L7) Defenses

The first rule of protection is to adhere to secure coding and architecture practices — that can mitigate the majority of your risk. Beyond that, you can layer on network defenses along both axes: north-south, to monitor and block malicious external traffic to your applications and APIs; and east-west, to monitor traffic from container to container, cluster to cluster, and cloud to cloud to make sure you’re not being victimized by a compromised pod.

Node security - Layer 4

Node-level security isn’t quite as exciting as networking, but it’s just as important. To prevent container breakout on a VM or other node, limit external administrative access to nodes as well as the control plane, and watch out for open ports and services. Keep your base operating systems minimal, and harden them using CIS benchmarks. Finally, make sure to scan and patch your nodes just like any other VM.

Kubernetes Cluster Components - Layer 5

There are all kinds of things going on in a Kubernetes cluster, and there is no all-in-one tool or strategy to secure it. At a high level, you should focus on:

- API Server – check your mechanisms for access control and authentication, and perform additional security checks of your dynamic admission webhooks, Pod Security admission configuration, and public network access to the Kubernetes API

- Access control – use role-based access control (RBAC) to enforce the principle of least privilege for your API server and Kubernetes secrets

- Service account tokens – to prevent unauthorized access, limit permissions to service accounts as well as to any secrets where service account tokens are stored

- Audit logging – make sure this is enabled

- Third-party components – be careful about what you’re bringing into your cluster so you know what’s running there and why (for example with a KBOM)

- Kubernetes versions – Kubernetes can have vulnerabilities just like any other system, and has to be updated and patched promptly

- Kubelet misconfiguration – the kubelet runs on every node and manages pods through the container runtime, so a misconfigured kubelet can be abused and attacked in an attempt to elevate privileges

Kubernetes Security Standards: CIS, DISA and NIST

To ensure the highest level of security for Kubernetes, it is essential to get acquainted with container security standards. Both the Center for Internet Security (CIS) and the Defense Information Systems Agency (DISA) have published container security benchmarks and recommended practices. These documents provide detailed guidance on configuring your Kubernetes environment and protecting it against potential threats. They also act as industry-wide security standards trusted and accepted by many organizations.

The National Institute of Standards and Technology (NIST) has released Special Publication (SP) 800-190, the Application Container Security Guide, to help organizations secure their containerized applications and related infrastructure components.

This insightful NIST container security guide will provide you with a thorough breakdown of how to securely and efficiently deploy and manage containers in an enterprise environment and tactics to ensure the integrity of your software supply chain.

The NIST Secure Software Development Framework (SSDF) has been released to give organizations a structured way of creating secure software systems. This framework complements the NIST SP 800-190 container security guidance, offering direction on how to securely construct, deploy, and handle containerized applications.

In response to the recent executive order on supply chain security, NIST updated its SP 800-161 guidance. This framework was created with vigilance in mind, describing a secure software supply chain management system that enables organizations to guarantee the integrity of all software components. SP 800-161 provides specific guidance for safely developing and deploying containers, including detailed recommendations on establishing efficient and secure DevOps processes. RAD Security has released the first Kubernetes Bill of Materials (KBOM) standard to help teams incorporate Kubernetes into their efforts around software supply chain security. To contribute, visit our GitHub repo.

Kubernetes Security Tools & Capabilities

Kubernetes is a technology that reaches into the CI/CD pipeline in the application development lifecycle, deploys containers, and determines many of the conditions under which workloads run along with the services they utilize. As such, there is an enormous variety of tools used that classify themselves as Kubernetes Security, from vulnerability scanning tools to posture management, runtime, identity and detection and response tools.

KSPM

Kubernetes Security Posture Management (KSPM) focuses on the continuous assessment and improvement of the security posture of Kubernetes environments. It involves identifying misconfigurations, enforcing security best practices, and monitoring compliance with regulatory and organizational standards. Examples of these misconfigurations include:

- Workloads that have been deployed with excessive privileges

- Open Kubernetes API configuration

- RBAC Misconfigurations

→ Hardening Kubernetes Clusters: KSPM Series Part I

→ A Tool for Incident Response: KSPM Series Part II

→ Defense in Depth: KSPM Series Part III

CNAPP

Cloud-Native Application Protection Platform (CNAPP) is an emerging category coined by Gartner analysts to describe a solution that spans from image scanning prior to deployment through to runtime, and that also includes some Cloud Security Posture Management (CSPM) capability. There is no clear definition of the exact features required to qualify as a CNAPP, but in general, it should have capabilities across the entire application development lifecycle. Vendors differ in how deeply they integrate across those pieces.

CWPP

A Cloud Workload Protection Platform (CWPP) is a security solution designed to protect workloads in cloud environments. The category can include Endpoint Detection and Response (EDR) and Extended Detection and Response (XDR) tools, which might or might not secure containerized environments. The workloads it secures include containers, serverless functions, and virtual machines.

→ Don’t Take Your nDR’s Word for it When it Comes to Kubernetes

SCA

Software Composition Analysis (SCA) involves scanning software components, especially open-source libraries and dependencies, for vulnerabilities. This is crucial in a Kubernetes environment where applications often rely on a mix of proprietary and open-source components.

KBOM

Kubernetes Bill of Materials (KBOM) is a detailed asset inventory or list of all components, libraries, and dependencies used in a Kubernetes application. This is helpful when trying to comply with the guidelines in the Biden Administration’s recent executive order, because it brings Kubernetes into the picture instead of focusing only on Software Bills of Materials (SBOMs) that are generated prior to deployment. A KBOM can help with vulnerability management, license compliance, and security audits.

→ RAD Security releases the first Kubernetes Bill of Materials (KBOM) standard

Admission Control

Kubernetes admission control is an important enforcement capability that can block misconfigured workloads from deploying at the Kubernetes API level, per security policies. You can use the built-in Pod Security admission controller, which enforces the Pod Security Standards and replaced the older Pod Security Policies, or any number of third-party admission controllers like Kyverno or OPA Gatekeeper.

- Kubernetes Admission Controller Guide for Security Engineers

- How to Create a Kubernetes Admission Controller

Open Source

There are hundreds, if not thousands, of open source tools that offer observability, detection and response, logging, storage, and Kubernetes Security. These solutions offer a community-driven way to fill gaps in the ecosystem for running, maintaining, and securing Kubernetes environments. Here is an awesome list of Kubernetes security tools to get started.

Robust Configuration and Best Practices

Configuring Kubernetes securely is not just a one-time task but a continuous process of alignment with best practices and vigilance against evolving threats. In this critical section of the RAD Security Kubernetes Security Master Guide, we examine the nuances of robust Kubernetes configuration and the best practices that ensure a strong security posture.

→ Prerequisites To Implementing Kubernetes Security

Preventing Misconfigurations

Misconfigurations in Kubernetes environments are a leading cause of security breaches and operational issues.

These misconfigurations can arise from a variety of sources, including inadequate defaults, human errors, or a lack of understanding of Kubernetes' complexities. Preventing these misconfigurations is crucial for maintaining the security and stability of Kubernetes clusters.

→ 8 Ways Kubernetes Comes Out of the Box Misconfigured for Security

Update and Patch Management

Regularly updating Kubernetes and associated components is crucial for maintaining security and stability. Effective update and patch management involves regularly applying updates not only to Kubernetes itself but also to its underlying infrastructure and applications.

Each Kubernetes version has various new implications for architecture, identity and security decisions.

Kubernetes version 1.29 Release

Kubernetes version 1.28 Release

Implementing RBAC

Role-Based Access Control (RBAC) is a method of regulating access to computer or network resources based on the roles of individual users within an organization. Implementing RBAC effectively in Kubernetes is essential for defining who can access what within the cluster.
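To make "who can access what" concrete, the sketch below builds a namespaced, read-only Role and binds it to a single user, then adds a small helper that flags wildcard rules, a common source of excessive permissions. The names `pod-reader`, `staging`, and `jane@example.com` are hypothetical, and the helper is a simplified illustration rather than a full RBAC analyzer.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_role(namespace: str) -> dict:
    """A Role that can only read pods and their logs in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role(role: dict, user: str) -> dict:
    """Grant the Role to one user, in the Role's own namespace."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-binding", "namespace": meta["namespace"]},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_GROUP},
    }


def wildcard_rules(role: dict) -> list[dict]:
    """Return rules that use '*' for API groups, resources, or verbs."""
    return [
        rule
        for rule in role.get("rules", [])
        if "*" in rule.get("apiGroups", [])
        or "*" in rule.get("resources", [])
        or "*" in rule.get("verbs", [])
    ]


if __name__ == "__main__":
    role = read_only_role("staging")
    binding = bind_role(role, "jane@example.com")
    print(json.dumps([role, binding], indent=2))
    print("wildcard rules:", wildcard_rules(role))
```

Preferring a namespaced Role and RoleBinding over a ClusterRole and ClusterRoleBinding keeps the grant limited to one namespace, which is usually the simplest way to apply least privilege.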

Check out these resources for guidance on setting up RBAC to control access to resources and operations, thereby enhancing security and operational integrity:

→ RBAC vs Cloud IAM: What’s the Difference?

→ Download: The Top 5 Tips to Avoid Excessive Kubernetes RBAC Permissions

Vulnerability Scanning

Vulnerability scanning in Kubernetes is a critical component of a robust security strategy, involving the identification of security weaknesses within the cluster. This process encompasses scanning container images, Kubernetes configurations, and RBAC configurations to detect vulnerabilities that could be exploited by attackers. An intelligent scanning approach helps in early detection and remediation of security issues, thereby enhancing the overall security posture of Kubernetes deployments.

Types of Vulnerability Scanning

- Container Image Scanning: Examining container images for known vulnerabilities before they are deployed.

- Configuration Scanning: Assessing Kubernetes configuration files for potential misconfigurations or security risks (including RBAC).

Real-Time Vs. Log-Based Scanning

The methods of Kubernetes vulnerability scanning can generally be categorized into two types: Real-time scanning and log-based scanning. Each method has its distinct characteristics, advantages, and use cases. Understanding the differences between them is crucial for implementing an effective security strategy in Kubernetes environments.

Features of Real-Time Scanning via the K8s API

- Immediate Detection: Real-time scanning continuously monitors Kubernetes clusters, identifying vulnerabilities as they occur. This immediacy is crucial for detecting and mitigating threats quickly.

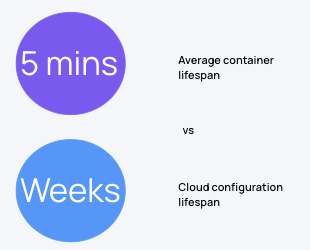

- Adaptability to Dynamic Environments: Kubernetes environments are highly dynamic, with containers frequently spinning up and down. Real-time scanning is adept at keeping pace with these changes, ensuring that short-lived containers are scanned before they terminate.

- Proactive Security Posture: It enables a proactive approach to security, where potential threats are identified and addressed in real-time, often before they can be exploited.

- Actionable Remediation: Real-time scanning provides instant feedback and actionable insights, allowing teams to respond to vulnerabilities swiftly and effectively.

Features of Log-Based Scanning (usually from cloud logs)

- Historical Data Analysis: Log-based scanning involves analyzing historical logs to identify vulnerabilities. This approach is beneficial for understanding past security incidents and trends over time.

- Delayed Detection: Unlike real-time scanning, log-based scanning does not provide immediate notification of vulnerabilities. This delay can be a significant drawback in environments where rapid response is critical.

- Strategic Insights: By examining historical data, log-based scanning can offer valuable insights for strategic security planning and compliance reporting.

- Trend and Pattern Recognition: It helps in recognizing patterns and trends in security threats, which can inform future security measures and policy development.

→ How to Improve Efficiency in Cloud-Native Security

→ You Can’t Secure Kubernetes Unless it’s in Real-Time

→ RAD Security Launches First Real-Time Kubernetes Security Posture Management (KSPM) Platform

Network Security and Isolation

Kubernetes offers various mechanisms to manage network traffic, control how applications communicate, and enforce isolation between different parts of the system. Proper implementation of these mechanisms is essential to protect against unauthorized access and network attacks, ensuring the security and integrity of the applications running within Kubernetes clusters.

Implementing Network Policies

Network policies are crucial for defining rules that govern ingress and egress traffic between pods within a cluster. They allow administrators to control which pods can communicate with each other, effectively reducing the potential attack surface.

Pod-to-Pod Isolation

Pod-to-Pod isolation ensures that individual pods can interact securely and as intended within a cluster. This isolation is key to preventing unauthorized access and communication between pods, which can be crucial for maintaining the integrity and security of different applications or services running on the same cluster.

Control of Ingress and Egress Traffic

The control of ingress and egress traffic is essential for managing how external traffic accesses services within the cluster (ingress) and how services within the cluster reach external resources (egress). This control is pivotal for enforcing security policies, preventing unauthorized access, and ensuring that data flows in and out of the cluster securely.

Service Mesh Implementation

Implementing a service mesh serves to enhance application networking by providing a dedicated infrastructure layer for handling inter-service communication. This implementation facilitates more complex operational requirements like service discovery, load balancing, failure recovery, and metrics tracking without adding additional code within the service applications themselves.

Network Segmentation and Firewalls

Network segmentation and the use of firewalls are critical for creating secure network boundaries within the cluster. This approach divides the network into smaller segments or zones, each with its own unique set of security policies and controls. This segmentation is instrumental in minimizing the attack surface and containing potential breaches within isolated network segments.

DDoS Protection and Rate Limiting

The implementation of DDoS protection is crucial for safeguarding the cluster against volumetric attacks aimed at overwhelming and incapacitating services. By deploying DDoS protection strategies, Kubernetes environments can maintain availability and performance even under high-traffic conditions or attack scenarios.
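Rate limiting is the complementary control named in this section's title. One widely used technique is the token bucket, sketched below as an illustration only. In a real cluster you would normally rely on the rate-limiting features of your ingress controller, API gateway, or cloud provider rather than application code. The class and parameter names here are hypothetical.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock  # injectable so the limiter can be tested
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and spend tokens if the request fits in the bucket."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill for the time that has passed, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    # Five requests per second on average, with bursts of up to ten.
    bucket = TokenBucket(rate=5, capacity=10)
    accepted = sum(bucket.allow() for _ in range(25))
    print(f"accepted {accepted} of 25 back-to-back requests")
```

A limiter like this is typically kept per client (for example, per source IP or API key), so that one noisy caller cannot exhaust the budget of everyone else.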

- Kubernetes Network Security 101

- Why Micro-Segmentation is Key to Zero Trust in Kubernetes Security

Disaster Recovery and Data Backup

Disaster recovery and data backup provide a safety net against data loss and service interruptions, which can stem from various sources such as hardware failures, software bugs, or even cyber-attacks. This section will cover key strategies and practices for effective disaster recovery and data backup in Kubernetes environments.

Why Back Up Your Data?

Kubernetes, while robust, is not impervious to failures. The loss of data or service can be costly in terms of both time and resources. A disaster recovery and data backup plan ensures business continuity, minimizes downtime, and safeguards critical data.

Disaster Recovery Strategies

- High Availability Setup: Deploy Kubernetes clusters across multiple zones or regions. This ensures that if one zone goes down, the services can still run from another zone.

- Cluster Replication: Replicate critical components of the Kubernetes cluster, such as the etcd database, across different geographical locations.

- Failover and Failback Procedures: Establish clear procedures for failover to a backup system and failback to the primary system once the disaster is mitigated.

- Infrastructure as Code (IaC): Use IaC tools for quick and consistent infrastructure provisioning, which is vital for rapid recovery.

Monitoring and Alerts

Implement a robust monitoring and alerting system to detect anomalies and potential threats early. See Vulnerability Scanning for more details.

Integrating Secure Application Development

Integrating security early in the development process helps in identifying and mitigating vulnerabilities before they escalate into major issues. It aligns with the DevSecOps approach, where security is a shared responsibility among developers, operations, and security teams.

Here are a few key strategies for secure development:

- Secure Coding Practices: Educate and train developers in secure coding practices. This includes understanding common security pitfalls in coding and how to avoid them.

- Dependency Management: Regularly scan and update dependencies to mitigate vulnerabilities.

- Container Security: Secure container images by scanning for vulnerabilities and ensuring they are sourced from trusted registries. Implementing image signing and verification processes is also crucial.

- Implementing Kubernetes-specific policies: Define and enforce security policies using Kubernetes-native controls like Pod Security Admission.

- Continuous Integration/Continuous Deployment (CI/CD) Security: Integrate security testing tools into the CI/CD pipeline. This includes static application security testing (SAST), dynamic application security testing (DAST), and infrastructure as code scanning.

- Secrets Management: Securely manage secrets like API keys and passwords. Utilize Kubernetes secrets or external secrets management tools like HashiCorp Vault.
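On the secrets management point above, it helps to see why a Kubernetes Secret is not protected by its encoding. Values in a Secret manifest are base64-encoded, which anyone can reverse. The sketch below shows the round trip. The secret name, namespace, and value are hypothetical placeholders.

```python
import base64


def secret_manifest(name: str, namespace: str, values: dict[str, str]) -> dict:
    """Build an Opaque Secret manifest with base64-encoded values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode()).decode()
            for key, value in values.items()
        },
    }


def decode_secret(manifest: dict) -> dict[str, str]:
    """Recover the plain-text values; no key or password is required."""
    return {
        key: base64.b64decode(value).decode()
        for key, value in manifest["data"].items()
    }


if __name__ == "__main__":
    manifest = secret_manifest("db-credentials", "payments", {"password": "example-only"})
    print("stored:", manifest["data"])
    print("decoded:", decode_secret(manifest))
```

Because decoding needs no key, the real protection comes from restricting who can read Secrets with RBAC, enabling encryption at rest for the cluster's data store, and keeping Secret manifests out of source control or using an external secrets manager.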

Compliance and Policy Enforcement

With the increasing adoption of Kubernetes in various sectors, including those with stringent regulatory requirements like finance and healthcare, compliance becomes even more of a necessity. Ensuring that Kubernetes deployments comply with standards like GDPR, HIPAA, or PCI-DSS is essential for legal and operational integrity.

Key components of compliance and policy enforcement include:

- Understanding Regulatory Requirements: Start by thoroughly understanding the compliance requirements relevant to your industry. This includes data protection laws, industry-specific regulations, and best practices.

- Implementing Kubernetes-Specific Policies: Use Kubernetes-native tools like Role-Based Access Control (RBAC), Network Policies, and Pod Security Standards to enforce security and compliance policies within the cluster.

- Automated Compliance Scanning and Reporting: Implement tools that automatically scan Kubernetes configurations and workloads for compliance.

- Regular Audits and Reporting: Conduct regular audits with Kubernetes audit logging turned on, to ensure ongoing compliance. Maintain logs and reports for auditing purposes, which is crucial for demonstrating compliance to regulatory bodies.
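For the audit logging mentioned above, self-managed clusters pass an audit policy file to the API server with the `--audit-policy-file` flag, while managed services typically expose audit logs through provider settings instead. The sketch below builds a small example policy and includes a simplified matcher to show that rules are evaluated in order and the first match wins. The rule choices and the `audit_level` helper are illustrative assumptions, and the helper ignores most real matching fields such as users, verbs, and namespaces.

```python
import json

AUDIT_POLICY = {
    "apiVersion": "audit.k8s.io/v1",
    "kind": "Policy",
    "omitStages": ["RequestReceived"],
    "rules": [
        # Record who touched Secrets and ConfigMaps, but never their contents.
        {
            "level": "Metadata",
            "resources": [{"group": "", "resources": ["secrets", "configmaps"]}],
        },
        # Keep full request and response bodies for RBAC changes.
        {
            "level": "RequestResponse",
            "resources": [{"group": "rbac.authorization.k8s.io"}],
        },
        # Catch-all: log metadata for everything else.
        {"level": "Metadata"},
    ],
}


def audit_level(policy: dict, group: str, resource: str) -> str:
    """Return the level of the first rule matching a group and resource."""
    for rule in policy["rules"]:
        selectors = rule.get("resources")
        if not selectors:  # a rule without selectors matches everything
            return rule["level"]
        for selector in selectors:
            if selector.get("group", "") != group:
                continue
            names = selector.get("resources")
            if not names or resource in names:
                return rule["level"]
    return "None"


if __name__ == "__main__":
    # JSON is a subset of YAML, so this output can be saved as the policy file.
    print(json.dumps(AUDIT_POLICY, indent=2))
    print(audit_level(AUDIT_POLICY, "rbac.authorization.k8s.io", "rolebindings"))
```

Logging Secrets at the `Metadata` level is a deliberate choice: higher levels would copy the secret values themselves into the audit log.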

Integrating Compliance in CI/CD Pipelines

Incorporating compliance into Continuous Integration/Continuous Deployment (CI/CD) pipelines is a critical aspect of maintaining robust security practices in software development, especially in Kubernetes environments. This approach ensures that applications not only meet the functional requirements but also adhere to security and regulatory standards throughout the development lifecycle.

- Use RAD Security with GitHub Actions

Helpful comparisons

Runtime vs real-time KSPM

Oftentimes, anything ‘real-time’ in cloud native security is automatically equated with ‘runtime.’ But an application does not run in isolation, or outside of its associated infrastructure. Rather, it runs under a set of parameters, for example, whether the Kubernetes API tells it to run or not, based on the scheduler’s decisions. For a running workload, an important piece of context is the Kubernetes configuration attached to it. That is what real-time KSPM refers to. For example, is this workload allowed to run in privileged mode, or with cluster admin RBAC privileges? These controls live at the Kubernetes API layer.

In contrast, runtime itself, in Kubernetes, refers to the processes started by the kubelet and the activity they generate at the Linux (or Windows) kernel of the server, with the Container Runtime Interface (CRI) and a container runtime such as containerd or CRI-O in between. From a security perspective, runtime data on its own is better than KSPM at telling you what is happening within your application, such as whether there is malware in the code. That being said, the two must work in tandem. If you’re looking at runtime alerts for workloads that are locked down from a Kubernetes perspective, that is important information that will help you prioritize an investigation.

Cloud security vs K8s Security

Many times, Kubernetes Security is confused with cloud security, and there is an assumption that ‘what works for the cloud will work for Kubernetes; at the end of the day, it’s all configurations, right?’ Wrong. A Kubernetes workload changes every 5 minutes, on average. A cloud account’s configurations change weekly, at best, but sometimes they don’t change for months at a time. You can’t apply a cloud security methodology to securing Kubernetes, because by the time you go to fix a misconfiguration, the workload it is attached to will have already disappeared. And then you have no information about what transpired in that workload between polling intervals.

Container vs K8s Security

Kubernetes is an orchestration tool for containers, but it is not the case that if you have container security, you are set for Kubernetes at the same time. For example, take authentication and authorization. If you have cluster admin, or other privileged access to your Kubernetes clusters, your container security won’t help or prevent an attacker from getting a container to do what they want. And then there is the simple fact that, if Kubernetes is misconfigured, container security won’t stop an attacker from getting access to the Kubernetes API, escaping containers, and doing what they want. You definitely need to have elements of container security to protect your clusters, like image scanning and runtime controls, but container and Kubernetes security are not interchangeable.

- K8s Security != Container Security

Kubernetes Security vs GitOps & IAC

GitOps and Infrastructure as Code (IaC) scanning are key players in putting up effective security guardrails for Kubernetes. But they will not tell you whether or not your cluster’s actual activity matches what has been defined in code. It is possible that somebody has come in later in the cycle to change something manually, and it is also possible that things were, by accident, not coded as intended. There is, of course, also the issue of malicious insiders, or of legitimate users who misuse their privileges, whether deliberately or by mistake.

- Where GitOps and IaC Fall Short in Kubernetes Security

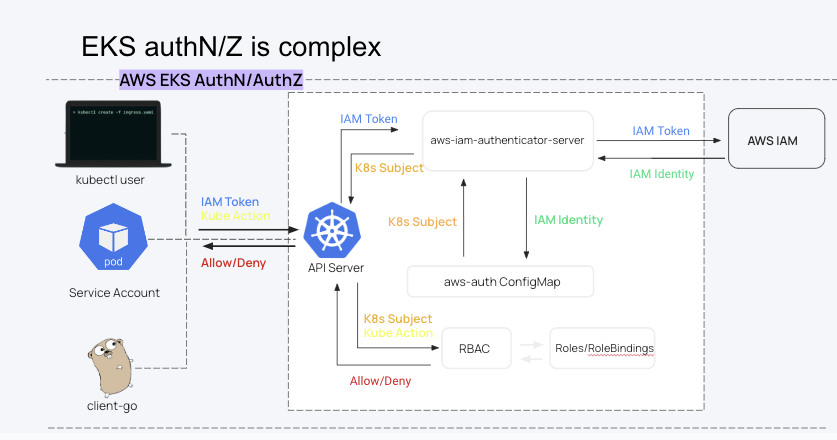

Cloud IAM vs Kubernetes RBAC

Cloud IAM and Kubernetes RBAC work together to perform authentication and authorization into Kubernetes clusters, but neither one substitutes for the other. On Amazon EKS, for example, the aws-auth ConfigMap does the hard work of translating an IAM role (authentication) into a Kubernetes user or group that RBAC roles are bound to (authorization). Attackers have targeted kubeconfig files to get these kinds of keys to the kingdom. The most important thing is to be able to view both of them in a connected, real-time attack path.

- Kubernetes RBAC vs Cloud IAM: What’s the Difference?

Kubernetes Security Operations Center (RAD Security)

RAD Security maps the broad components of Kubernetes in real-time for accurate cloud native identity threat detection, risk and incident detection. This includes features like real-time KSPM, attack paths that span cloud native infra to runtime, and cloud native identity threat detection.

We can reduce the noise to signal ratio by 98% or more, showing your top risk across all your clusters in less than 5 minutes, supporting your vulnerability management program, zero trust initiatives, and cloud native detection and response.

Reach out to the team for a demo today:

Resources

Webinars and White Papers

https://RAD Security.com/resources

Security Blog
